MECHANICAL ENGINEERING CAPSTONE PROJECT ARCHIVES

HEAD-RELATED TRANSFER FUNCTIONS:

LOCALIZABLE AUDIO FOR VIRTUAL REALITY

Evan Bubniak

Daniel Pak

Miho Takeuchi

ADVISED BY PROFESSOR MARTIN LAWLESS

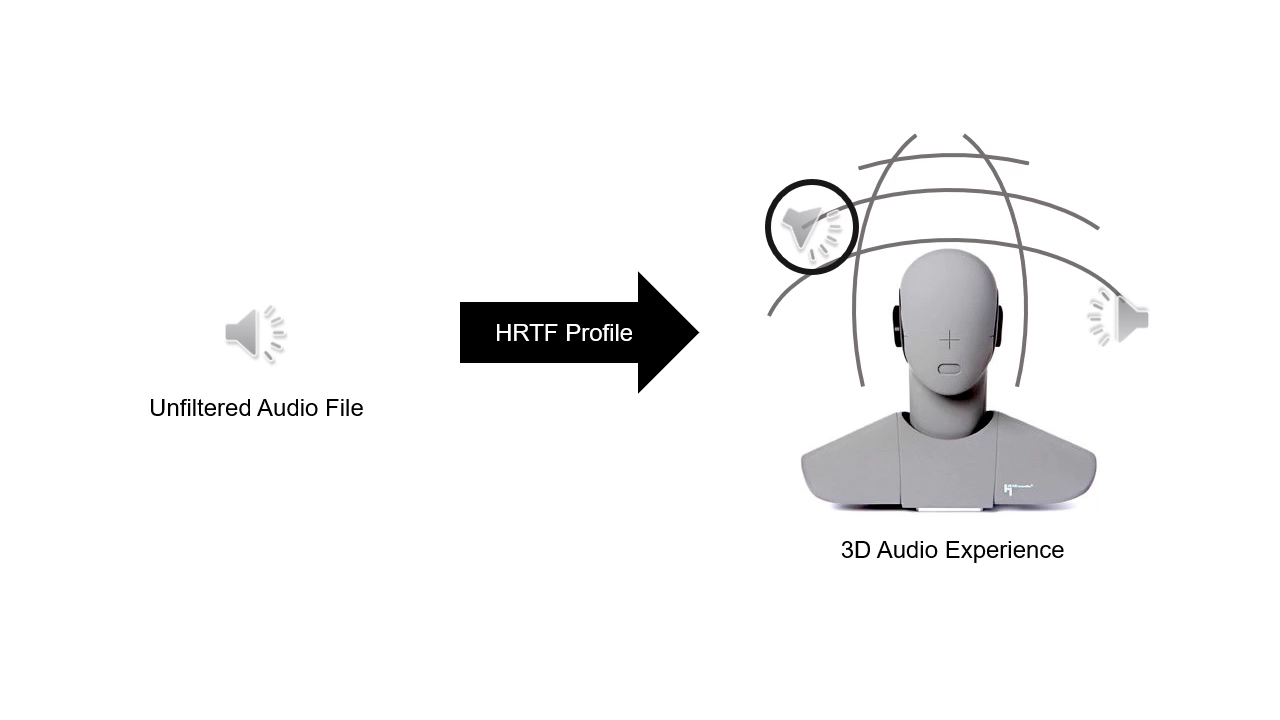

Accurately localizing sounds is crucial to creating an immersive audio environment for virtual reality. Synthesizing sounds for headphone playback requires passing them through filters called head-related transfer functions (HRTFs), which encode information about the sound’s location and the user’s anthropometric features. Obtaining an HRTF profile, which consists of HRTFs measured at hundreds of locations arranged spherically around the listener, requires an extensive experimental setup. The goal of this project is to extrapolate a full HRTF profile from a sparse set of HRTF measurements. An artificial neural network, trained on the HUTUBS database of HRTFs measured at 440 locations for 93 subjects, accepts a small number of HRTFs measured at predetermined locations, selected through grid search optimization, and predicts the full profile. Based on these locations, an experimental setup consisting of full-range speakers on custom-made speaker stands and in-ear binaural microphones was constructed to collect a few HRTFs from each participant. Each participant’s HRTF profile is then extrapolated for audio localization in virtual reality.
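
As a rough illustration of the filtering step described above, the sketch below renders a mono signal for headphones by convolving it with a left and a right head-related impulse response (HRIR, the time-domain counterpart of an HRTF). It is not the project's implementation: the function names and the toy HRIR values are hypothetical, chosen only to show the left ear receiving the sound earlier and louder than the right for a source on the listener's left. Measured HRIRs, such as those in the HUTUBS database, would be far longer.

```python
def convolve(signal, impulse_response):
    """Direct-form linear convolution of two finite sequences."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Filter a mono signal with the HRIR pair for one source direction."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Hypothetical toy HRIRs (assumed values, not measured data) for a source
# on the listener's left: the right-ear response is delayed and attenuated.
HRIR_LEFT = [1.0, 0.3, 0.0, 0.0]
HRIR_RIGHT = [0.0, 0.0, 0.5, 0.2]

# A single click as the mono input signal.
mono_click = [1.0, 0.0, 0.0]
left, right = render_binaural(mono_click, HRIR_LEFT, HRIR_RIGHT)
```

Playing `left` and `right` on the corresponding headphone channels gives the listener the arrival-time and level differences that the HRIR pair encodes for that direction.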